Kyn-elwynn - Second Home

More Posts from Kyn-elwynn and Others

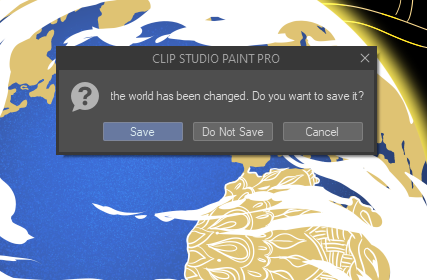

when your art program’s closing message hits you straight in the heart and makes you stop and contemplate the state of it all

obsessed with how fixable society is, on a structural level.

obsessed with how all you need to do is throw money at public education and eliminate most standardized testing and you will start getting smarter, more engaged, kinder adults. obsessed with how giving people safe housing, reliable access to good food, and decent wages dramatically reduces drug overdoses and gun violence. obsessed with how much people actually want to get together and fix infrastructure, invent new ways of helping each other, and create global ways of living sustainably once you give them livable pay to do so. obsessed with how tracking diseases, developing medicines, and improving public health becomes so much easier when you just make healthcare free at point of use.

obsessed with how easy it all becomes, if we can just figure out how to wrench the wealth out of the hands of the hoarders.

Why I don’t like AI art

I'm on a 20+ city book tour for my new novel PICKS AND SHOVELS. Catch me in CHICAGO with PETER SAGAL on Apr 2, and in BLOOMINGTON at MORGENSTERN BOOKS on Apr 4. More tour dates here.

A law professor friend tells me that LLMs have completely transformed the way she relates to grad students and post-docs – for the worse. And no, it's not that they're cheating on their homework or using LLMs to write briefs full of hallucinated cases.

The thing that LLMs have changed in my friend's law school is letters of reference. Historically, students would only ask a prof for a letter of reference if they knew the prof really rated them. Writing a good reference is a ton of work, and that's rather the point: the mere fact that a law prof was willing to write one for you represents a signal about how highly they value you. It's a form of proof of work.

But then came the chatbots and with them, the knowledge that a reference letter could be generated by feeding three bullet points to a chatbot and having it generate five paragraphs of florid nonsense based on those three short sentences. Suddenly, profs were expected to write letters for many, many students – not just the top performers.

Of course, this was also happening at other universities, meaning that when my friend's school opened up for postdocs, they were inundated with letters of reference from profs elsewhere. Naturally, they handled this flood by feeding each letter back into an LLM and asking it to boil it down to three bullet points. No one thinks that these are identical to the three bullet points that were used to generate the letters, but it's close enough, right?

Obviously, this is terrible. At this point, letters of reference might as well consist solely of three bullet points on letterhead. After all, the entire communicative intent in a chatbot-generated letter is just those three bullets. Everything else is padding, and all it does is dilute the communicative intent of the work. No matter how grammatically correct or even stylistically interesting the AI-generated sentences are, they have less communicative freight than the three original bullet points. After all, the AI doesn't know anything about the grad student, so anything it adds to those three bullet points is, by definition, irrelevant to the question of whether they're well suited for a postdoc.

Which brings me to art. As a working artist in his third decade of professional life, I've concluded that the point of art is to take a big, numinous, irreducible feeling that fills the artist's mind, and attempt to infuse that feeling into some artistic vessel – a book, a painting, a song, a dance, a sculpture, etc – in the hopes that this work will cause a loose facsimile of that numinous, irreducible feeling to manifest in someone else's mind.

Art, in other words, is an act of communication – and there you have the problem with AI art. As a writer, when I write a novel, I make tens – if not hundreds – of thousands of tiny decisions that are in service to this business of causing my big, irreducible, numinous feeling to materialize in your mind. Most of those decisions aren't even conscious, but they are definitely decisions, and I don't make them solely on the basis of probabilistic autocomplete. One of my novels may be good and it may be bad, but one thing it definitely is, is rich in communicative intent. Every one of those microdecisions is an expression of artistic intent.

Now, I'm not much of a visual artist. I can't draw, though I really enjoy creating collages, which you can see here:

https://www.flickr.com/photos/doctorow/albums/72177720316719208

I can tell you that every time I move a layer, change the color balance, or use the lasso tool to nip a few pixels out of a 19th century editorial cartoon that I'm matting into a modern backdrop, I'm making a communicative decision. The goal isn't "perfection" or "photorealism." I'm not trying to spin around really quick in order to get a look at the stuff behind me in Plato's cave. I am making communicative choices.

What's more: working with that lasso tool on a 10,000-pixel-wide Library of Congress scan of a painting from the cover of Puck magazine or a 15,000-pixel-wide scan of Hieronymus Bosch's Garden of Earthly Delights means that I'm touching the smallest individual contours of each brushstroke. This is quite a meditative experience – but it's also quite a communicative one. Tracing the smallest irregularities in a brushstroke definitely materializes a theory of mind for me, in which I can feel the artist reaching out across time to convey something to me via the tiny microdecisions I'm going over with my cursor.

Herein lies the problem with AI art. Just like with a law school letter of reference generated from three bullet points, the prompt given to an AI to produce creative writing or an image is the sum total of the communicative intent infused into the work. The prompter has a big, numinous, irreducible feeling and they want to infuse it into a work in order to materialize versions of that feeling in your mind and mine. When they deliver a single line's worth of description into the prompt box, then – by definition – that's the only part that carries any communicative freight. The AI has taken one sentence's worth of actual communication intended to convey the big, numinous, irreducible feeling and diluted it amongst a thousand brushstrokes or 10,000 words. I think this is what we mean when we say AI art is soul-less and sterile. Like the five paragraphs of nonsense generated from three bullet points from a law prof, the AI is padding out the part that makes this art – the microdecisions intended to convey the big, numinous, irreducible feeling – with a bunch of stuff that has no communicative intent and therefore can't be art.

If my thesis is right, then the more you work with the AI, the more art-like its output becomes. If the AI generates 50 variations from your prompt and you choose one, that's one more microdecision infused into the work. If you re-prompt and re-re-prompt the AI to generate refinements, then each of those prompts is a new payload of microdecisions that the AI can spread out across all the words or pixels, increasing the amount of communicative intent in each one.

Finally: not all art is verbose. Marcel Duchamp's "Fountain" – a urinal signed "R. Mutt" – has very few communicative choices. Duchamp chose the urinal, chose the paint, painted the signature, came up with a title (probably some other choices went into it, too). It's a significant work of art. I know because when I look at it I feel a big, numinous irreducible feeling that Duchamp infused in the work so that I could experience a facsimile of Duchamp's artistic impulse.

There are individual sentences, brushstrokes, single dance-steps that initiate the upload of the creator's numinous, irreducible feeling directly into my brain. It's possible that a single very good prompt could produce text or an image that had artistic meaning. But it's not likely, in just the same way that scribbling three words on a sheet of paper or painting a single brushstroke is unlikely to produce a meaningful work of art. Most art is somewhat verbose (but not all of it).

So there you have it: the reason I don't like AI art. It's not that AI artists lack for the big, numinous irreducible feelings. I firmly believe we all have those. The problem is that an AI prompt has very little communicative intent and nearly all (but not every) good piece of art has more communicative intent than fits into an AI prompt.

If you'd like an essay-formatted version of this post to read or share, here's a link to it on pluralistic.net, my surveillance-free, ad-free, tracker-free blog:

https://pluralistic.net/2025/03/25/communicative-intent/#diluted

Image: Cryteria (modified) https://commons.wikimedia.org/wiki/File:HAL9000.svg

CC BY 3.0 https://creativecommons.org/licenses/by/3.0/deed.en

Half Goblin, half Hobbit.

Goblit.

Transphobes be like

Or water fountains, public washrooms, outdoors tables, etc, etc

babe. I know we’re all going thru a lot rn but I just wanna give u the heads up that sesame street’s future is in jeopardy. hbo has chosen not to renew it for new episodes (a series that has been going since 1969) and the residents of 123 Sesame Street no longer have a home :(

Some colouring process if you want to create hands without outlines. And some more hands holding stuff. Click the link below the pictures to see the whole post on Instagram!

you know even if a homeless person or a starving person is in that position because of their own "bad decisions" i don't care. it doesn't matter. no supposed financial misstep is enough to condemn someone to homelessness or poverty.

and it's not so much about keeping people “dumb” as it is about keeping people functionally illiterate. if they can barely read, they won't read those naughty books that might make them question the reality handed down to them. they won't have any historical context for the situation they are in or any knowledge of the struggles and victories that followed.

they won't read those books that cause the reader to feel empathy for somebody living a different walk of life from them. they won't read the policies of their chosen political parties or the statistical results of those policies or even the religious scripture on which they believe they're basing their decisions.

and if they can barely write, they will find it harder to transmit their own thoughts and ideas—even orally.

less literacy means people watch and listen to short sound bites and slogans and video clips over anything with depth or anything that brings context or nuance into the discussion. less literacy means creating a society of easy marks ready to be fooled, ready to be misled. less literacy means less ability to understand the scientific knowledge humanity has amassed and less ability to realize when they are being abused. less literacy means less imagination, lower attention spans, and less awareness.
